Yesterday we received an invitation to join the beta of EC2 (Elastic Compute Cloud), Amazon's new service that provides virtual servers integrated with its S3 storage service.

The first time I heard about EC2, I immediately thought of those slow, overcrowded virtual servers where users fight over the resources of a few processors and a few gigabytes of memory. Yeah, you can get a server like that for $10 a month, but it is barely enough for a personal blog. Still, just as I love beta testing online games, I love beta testing Amazon stuff: it is always refreshing and ahead of the rest! Needless to say, if Amazon decides to release a service like this, it is because they are proud enough of it.

How does it work?

It is basically a repository of file system images (stored in S3) that can be installed and mounted on a virtual server on demand, in less than two minutes. You can upload your own images and turn your web server, your database, or whatever you want into a virtual server. Thanks to a powerful (and secure) set of tools for creating instances of those servers, building clusters has never been so easy! Once an image has been instantiated on a server, you can log into it over SSH and configure the firewall to allow traffic on specific ports.

For every hour (or fraction of an hour) that an instance is running, they charge $0.10, plus $0.20 per GB of traffic between EC2 and the outside network, plus $0.15 per GB-month of storage used in S3. It will not be as cheap as a $10 virtual server, but it does not carry the costs and risks of a dedicated server either.
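To make the pricing concrete, here is a minimal Python sketch of a monthly estimate using the beta rates above. It assumes that each instance's running hours are rounded up to a whole hour (the "or fraction" rule), that the $0.20 applies to every GB crossing the EC2 network boundary, and that S3 usage is a monthly average; the function name `monthly_cost` is hypothetical.

```python
import math
from decimal import Decimal

# Beta rates quoted in this post (USD); assumed constant for this sketch.
INSTANCE_HOUR = Decimal("0.10")  # per instance-hour, any fraction billed as a full hour
TRANSFER_GB = Decimal("0.20")    # per GB of traffic crossing the EC2 network boundary
S3_GB_MONTH = Decimal("0.15")    # per GB-month stored in S3


def monthly_cost(instances: int, hours_on: float, gb_external: float, gb_s3: float) -> Decimal:
    """Estimate one month's bill for `instances` servers, each running `hours_on` hours."""
    # Partial hours are rounded up to a full billed hour per instance.
    billed_hours = math.ceil(hours_on)
    compute = INSTANCE_HOUR * instances * billed_hours
    transfer = TRANSFER_GB * Decimal(str(gb_external))
    storage = S3_GB_MONTH * Decimal(str(gb_s3))
    return compute + transfer + storage


if __name__ == "__main__":
    # One server running for a 30-day month, 10 GB of external traffic, 5 GB in S3.
    print(monthly_cost(1, 30 * 24, 10, 5))
```

With one server running all month, 10 GB of external traffic and 5 GB stored in S3, this prints 74.75: $72 of compute, $2 of traffic and $0.75 of storage. Decimal is used instead of float so the cents come out exact.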

What can we load there?

They state that you can load any distribution as long as it is compatible with the 2.6 kernel, and they actively support Red Hat's Fedora Core 3 and 4. I tried to build a Debian image with debootstrap, but I could not get it to work. I'm not much of a systems guru, so I probably missed some step that left the image unbootable. The sad part is that I lost 2 hours building, compressing and uploading the image, for nothing. Following their guidelines, I managed to build a Fedora image that worked nicely.

Is the information we store on the server persistent?

Sadly, the answer is: only while the instance is running. If you want a permanent file system, you have to use S3 or a distributed file system spread across other instances. They also state that, although your images are safe in S3, instances can fail, and you would lose all the files stored on that instance (databases, files, configs, logs, …). There is a tool that makes an image of your system while it is running and sends it to S3, ready to be used again or to create a new instance.

How fast is it?

My impression is that it is just a bit slower than a real dedicated server. It feels fast, and it feels uncapped.

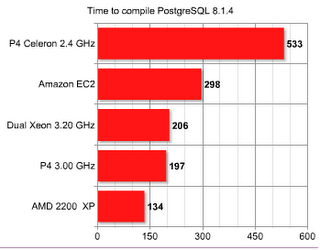

I ran a small benchmark on a few systems I have around the world, timing how long each one takes to compile PostgreSQL 8.1.4.

Here are the results:

Even though it is about 1/3 slower than a dual Intel Xeon or a P4 with an optimized 64-bit kernel, that just means we can forget the word “virtual” when talking about speed. These are quite real speeds.

Surprisingly, our old AMD machine running FreeBSD rocks, even though its CPU is clocked at only 1.80 GHz and it is 3-4 years old!
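A compile benchmark like this one boils down to timing a command's wall-clock duration on each machine and comparing the results. The Python sketch below is a hypothetical harness, not the script used for these numbers: `time_command` runs any command to completion, and `relative_slowdown` expresses a time as a fraction slower than a reference (0.33 would correspond to "about 1/3 slower").

```python
import subprocess
import sys
import time


def time_command(cmd, cwd=None) -> float:
    """Run `cmd` to completion and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, cwd=cwd, check=True)  # raises if the command fails
    return time.perf_counter() - start


def relative_slowdown(t_candidate: float, t_reference: float) -> float:
    """How much slower the candidate is, as a fraction of the reference time."""
    return t_candidate / t_reference - 1.0


if __name__ == "__main__":
    # Example (hypothetical path): python bench.py make -C postgresql-8.1.4
    if len(sys.argv) < 2:
        print("usage: bench.py COMMAND [ARGS...]")
    else:
        print(f"{time_command(sys.argv[1:]):.1f} s")
```

Run it with the same command on each machine (after the same `configure` step) so only the compile itself is timed.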

What do I get?

Basically, when your budget can't pay for reliability, EC2 is the answer! Never mind that storage is volatile: that can be solved fairly easily with a shared, distributed file system. Once a system works, you make an image of it, and it will always work; you can clone it as many times as you want. There is even a parameter, when you instantiate an image, to specify how many instances you want! “Today I'm going to order 8 web servers, 4 database servers and 2 application servers.” Nobody can beat that: on demand, in less than 2 minutes.
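As a quick sanity check on what that order costs, here is a small Python sketch that totals a role-to-count plan at the $0.10 per instance-hour beta rate. The `PLAN` dictionary and `fleet_summary` function are hypothetical illustrations; traffic and S3 charges are ignored.

```python
from decimal import Decimal
from typing import Dict, Tuple

HOURLY_RATE = Decimal("0.10")  # beta price per instance-hour quoted in this post

# Hypothetical plan: role -> number of instances launched from that role's image.
PLAN: Dict[str, int] = {"web": 8, "database": 4, "application": 2}


def fleet_summary(plan: Dict[str, int]) -> Tuple[int, Decimal, Decimal]:
    """Return (total instances, cost per hour, cost per day) for a plan."""
    total = sum(plan.values())
    per_hour = HOURLY_RATE * total
    return total, per_hour, per_hour * 24


if __name__ == "__main__":
    total, per_hour, per_day = fleet_summary(PLAN)
    print(f"{total} instances, ${per_hour} per hour, ${per_day} per day")
```

For the 8 + 4 + 2 order it prints `14 instances, $1.40 per hour, $33.60 per day`.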

Some curiosities…

Not surprisingly, the CPUs are AMD Opteron 250s (or at least that is what /proc/cpuinfo reports). What I still don't know is how it works internally: is a CPU simply assigned to my instance, or are they really virtualizing a bigger cluster?
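Checking what the kernel reports is a matter of reading the `model name` lines in /proc/cpuinfo. The Python sketch below counts processors per model; the function name `cpu_models` is hypothetical, and it assumes an x86 Linux layout where each processor block has a `model name : ...` line (other architectures may use different field names).

```python
from collections import Counter


def cpu_models(cpuinfo_text: str) -> Counter:
    """Count logical processors by the 'model name' field of /proc/cpuinfo text."""
    models = Counter()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "model name":
            models[value.strip()] += 1
    return models


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            for model, count in cpu_models(f.read()).items():
                print(f"{count} x {model}")
    except OSError:
        print("/proc/cpuinfo is not available on this system")
```

Note that this only shows what the virtualized kernel is told; it cannot answer whether the CPU is dedicated to the instance or shared.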

The servers come with a generous amount of memory (1.75 GB) and plenty of “temporary” hard disk space (160 GB).

All the tools for building file system images, signing them and publishing them to S3 are written in Ruby.

I'm not really sure, but it looks like you can't assign a static IP address to a server. Nor is it guaranteed that an image will always receive the same IP address.

The servers' connectivity is extremely good: ping time to google.com is 2 ms!

The test

To see how it handles a real workload, I made an exact copy of our Pricenoia server on an EC2 virtual server:

http://domU-12-31-33-00-02-6A.usma1.compute.amazonaws.com/

Although it is clearly a lot faster than our dedicated server, it sometimes hangs for a second or two. I still haven't had a chance to track down the problem, but it looks a bit odd. Actually, that is the only thing that worries me about EC2.
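One way to start tracking down those pauses is to fire repeated requests at the site and log every request that takes longer than a threshold. The Python sketch below is a hypothetical probe, not something used in this test: `find_stalls` times any fetch function, `http_fetcher` wraps the standard library's `urllib.request.urlopen`, and the 1-second threshold and 100 requests are arbitrary choices.

```python
import sys
import time
import urllib.request
from typing import Callable, List, Tuple


def find_stalls(fetch: Callable[[], None], requests: int, threshold_s: float,
                clock: Callable[[], float] = time.perf_counter) -> List[Tuple[int, float]]:
    """Call `fetch` repeatedly; return (request index, seconds) for calls over the threshold."""
    stalls = []
    for i in range(requests):
        start = clock()
        fetch()
        elapsed = clock() - start
        if elapsed > threshold_s:
            stalls.append((i, elapsed))
    return stalls


def http_fetcher(url: str, timeout_s: float = 10.0) -> Callable[[], None]:
    """Build a fetch function that downloads `url` completely."""
    def fetch() -> None:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            resp.read()
    return fetch


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: stalls.py URL")
    else:
        for i, secs in find_stalls(http_fetcher(sys.argv[1]), requests=100, threshold_s=1.0):
            print(f"request {i}: {secs:.2f} s")
```

The injectable `clock` keeps the function testable; comparing the stall timestamps with server logs would be the next step in locating the cause.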